Configuring a server side trace

Nov 1st

When I'm after SQL Server performance problems, SQL Server Profiler is still my number one tool. Although I know that extended events provide a more lightweight solution, those are still a bit cumbersome to use (but I've seen that we can expect some improvements with SQL Server 2012).

When I'm using Profiler to isolate performance issues, I try to configure server side traces whenever possible. Fortunately, SQL Server Profiler will help you create a script for a server side trace (File/Export/Script Trace Definition), so you don't have to figure out all the event and column codes. Very good!
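For orientation, a server side trace is built from a handful of system procedure calls. The following is a minimal sketch, not the exported Profiler script and not the stored procedure shown further down. The file path, the file size, and the choice of events and columns are assumptions made for illustration only.

```sql
-- Sketch of a minimal server side trace.
-- Assumptions: the folder C:\Traces exists and is writable by the
-- SQL Server service account; 50 MB is an arbitrary size limit.
declare @traceID     int,
        @maxFileSize bigint,
        @on          bit,
        @rc          int;
set @maxFileSize = 50;   -- maximum trace file size in MB
set @on = 1;

-- No file extension: .trc is appended automatically.
-- The target file must not exist yet.
exec @rc = sp_trace_create @traceID output, 0, N'C:\Traces\perfTrace', @maxFileSize, null;
if @rc <> 0
begin
    raiserror('sp_trace_create failed with return code %d', 16, 1, @rc);
    return;
end

-- Event 12 = SQL:BatchCompleted
-- Columns: 1 = TextData, 12 = SPID, 13 = Duration,
--          16 = Reads, 17 = Writes, 18 = CPU
exec sp_trace_setevent @traceID, 12, 1,  @on;
exec sp_trace_setevent @traceID, 12, 12, @on;
exec sp_trace_setevent @traceID, 12, 13, @on;
exec sp_trace_setevent @traceID, 12, 16, @on;
exec sp_trace_setevent @traceID, 12, 17, @on;
exec sp_trace_setevent @traceID, 12, 18, @on;

exec sp_trace_setstatus @traceID, 1;   -- start the trace

-- ... the code to be observed goes here ...

exec sp_trace_setstatus @traceID, 0;   -- stop the trace
exec sp_trace_setstatus @traceID, 2;   -- close it and delete its definition

-- Read the collected events back from the trace file
select TextData, SPID, Duration, Reads, Writes, CPU
  from sys.fn_trace_gettable(N'C:\Traces\perfTrace.trc', default);
```

The trace file is written by the server itself, so the path refers to the server's file system, not the client's.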

As I was doing the same configuration again and again, I decided to encapsulate the T-SQL code for the configuration in a stored procedure.

And here comes dbo.configureServerSideTrace:

| if object_id('dbo.configureServerSideTrace', 'P') is not null |

Some annotations:

- For the parameters, see the comments.

- Don't specify a filename extension for the trace file. .TRC will be added automatically.

- Ensure that the output file does not already exist. Otherwise you'll get an error.

- Very often I place the code for starting and stopping the trace around "interesting code" inside a stored procedure. That is, I wrap some more or less awkward code by starting and stopping a trace like this:

| declare @traceID int |

Cheers.

Calculating SQL Server Data Compression Savings

Feb 27th

SQL Server 2008 Enterprise edition comes with an option for storing table or index data in a compressed format, which may save a huge amount of storage space and - much more important - IO requests and buffer pool utilization. There are two different options for data compression, namely Row and Page level compression. This blog post is not concerned with how these two work internally and will also not explain the differences between the two. If you'd like to know more about this, you'll find a lot of useful information on the internet - including links to further articles (e.g. here, here, and here).

Whether compression is worthwhile or not isn't an easy question to answer. One aspect that has to be taken into account is certainly the amount of storage that may be saved by storing a particular table or index in either of the two compressed formats. SSMS offers a Data Compression Wizard that can provide storage-saving estimates for row or page level compression. From the context menu for a table or index just open Storage/Manage Compression. In the combo box at the top select the compression type (Row or Page) and press the Calculate button at the bottom. Here's a sample of a calculated saving for an index:

Unfortunately, SSMS does not offer an option for calculating estimated savings for more than one table or index at once. If you, let's say, would like to know the estimated storage savings of page level compression for your largest 10 tables, there's no GUI support in SSMS that will assist you in finding an answer. This is where the stored procedure sp_estimate_data_compression_savings comes in handy. This procedure - as you may have guessed from its name - provides estimated savings for row or page level compression for any table or index. You have to provide the table or index as a parameter to the procedure. In other words: the procedure will only calculate the estimates for one table or index at a time. If you want to retrieve the estimates for more than one table or index as a result set, there's some more work to do, since the procedure has to be invoked multiple times. Here's a script that calculates the estimated savings of page level compression for the database in context.

|

-- Determine the estimated impact of compression |

The script calculates the impact of Page level compression but may easily be adapted to consider Row level compression instead. Please read the comments inside the script. Also, please notice that the script will only run on SQL Server Enterprise and Developer edition. All other editions don't provide the opportunity for data compression.

Here's a partial result retrieved from running the script against the AdventureWorksDW2008R2 database.

If you execute the script, please be aware that it may produce some extensive I/O. Running the script against your production database during business hours therefore wouldn't be a very good idea.
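To make the "one table at a time" point concrete, here is a minimal sketch of how sp_estimate_data_compression_savings can be invoked repeatedly. It is a simplified illustration, not the script from above: it just loops over the ten largest tables (by reserved pages) and returns one result set per table instead of collecting everything into a single result.

```sql
-- Sketch: estimate PAGE compression savings for the 10 largest
-- user tables of the current database, one result set per table.
declare @schema sysname,
        @table  sysname;

declare largest_tables cursor local fast_forward for
    select top (10) s.name, t.name
      from sys.tables as t
      join sys.schemas as s
        on s.schema_id = t.schema_id
      join sys.dm_db_partition_stats as ps
        on ps.object_id = t.object_id
     group by s.name, t.name
     order by sum(ps.reserved_page_count) desc;

open largest_tables;
fetch next from largest_tables into @schema, @table;

while @@fetch_status = 0
begin
    exec sp_estimate_data_compression_savings
         @schema_name      = @schema,
         @object_name      = @table,
         @index_id         = null,    -- null = all indexes of the table
         @partition_number = null,    -- null = all partitions
         @data_compression = 'PAGE';  -- 'ROW' for row level compression

    fetch next from largest_tables into @schema, @table;
end

close largest_tables;
deallocate largest_tables;
```

The same caution about I/O applies here, since every call samples the table's data.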

SQL Server Start Time

Feb 22nd

Have you ever tried finding out when your SQL Server instance was started? There are some sophisticated solutions, like the one from Tracy Hamlin (twitter), which takes advantage of the fact that tempdb is re-created every time SQL Server starts. Her solution goes like this:

|

select create_date |

Another answer to this question that I've sometimes seen on the internet queries the login time of one of the system processes:

|

select login_time |

This was my preferred way - until yesterday, when I discovered the following simple method:

|

select sqlserver_start_time |

Easy, isn't it? Interestingly though, each of the above three queries yields a different result. Here's a query with a sample output:

|

select (select sqlserver_start_time |

Result:

It seems the SQL Server service must be started first. Only after the service is running is tempdb created, followed by the start of the system processes. I can't imagine that the three different times make any difference in practice, e.g. if you try to find out how many hours your SQL Server instance has been running. But there may be applications out there that have to be aware of the difference.
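For reference, the three approaches can be put side by side roughly as follows. This is a sketch based on the documented catalog views and DMVs (sys.dm_os_sys_info, sys.databases, sys.dm_exec_sessions); it is not necessarily identical to the queries shown above. Taking the earliest login time of all system sessions is my own simplification.

```sql
-- Sketch: three ways of approximating the instance start time
select (select sqlserver_start_time
          from sys.dm_os_sys_info)         as service_start_time,
       (select create_date
          from sys.databases
         where name = N'tempdb')           as tempdb_create_date,
       (select min(login_time)
          from sys.dm_exec_sessions
         where is_user_process = 0)        as first_system_login;

-- Uptime in hours, based on the service start time
select datediff(hour, sqlserver_start_time, getdate()) as uptime_hours
  from sys.dm_os_sys_info;
```

The column sqlserver_start_time requires SQL Server 2008 or later.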

Exploring SQL Server Blockings and Timeouts

Jan 16th

Last Thursday I was giving a presentation about information collection and evaluation of SQL Server Blockings and Timeouts at the regional PASS chapter meeting in Munich.

You may download the presentation as well as the corresponding scripts here (German only).

How useful is your backup?

Dec 9th

A backup is worth nothing, if you can't utilize it for restore.

You probably agree with this well known word of wisdom, don't you?

This week I had to learn another aspect - the hard way: There are many cases that may require a restore. Only one of those cases is recovering a database from a state of failure. Another situation may require access to legacy data of a database that has since been decommissioned and doesn't exist anymore. In this case a successful restore from an older backup, although necessary, may not be sufficient. Here's the story why.

I was called by a customer who asked me to pull two documents out of a legacy (SQL Server 2000) database. We found an 8 year old backup we could rely on, and the restore worked well. Great! But then we discovered the documents had been stored in an IMAGE column, and nobody had an idea how they had been encoded at the time they were stored. We soon recognized that we needed the original application to get access to the documents, only to realize that nobody had an idea where to find the installation package. Eventually I found an 8 year old backup which included the old VB6 code. I could have used this code to rebuild the application and an installation package, but only with considerable difficulty, because:

- The application uses some ActiveX components that I may not be able to find anymore.

- The original application ran on Windows NT, so I may have to install this OS first, including the required service pack. Even if I find the installation CDs, I doubt I will be able to find the appropriate device drivers, but maybe I can set up a virtual machine.

- I would have to install Visual Studio 6, including the latest service pack, and I had no idea where to find the installation CDs.

- The application may rely on deprecated SQL Server 2000 features. So I have to have a SQL Server 2000 installation on which the existing backup has to be restored. If it gets worse, I might even have to install the appropriate service pack to get the application working. I have no idea which service pack this would be, so there's a chance I have to experiment.

I recognized that all those steps require a big effort and will take some days to accomplish. If only we had built a virtual machine of at least one legacy client system before replacing all client PCs by newer machines.

So, I'd modify the introductory statement like this:

A backup is worth nothing, if you can't utilize the data that is contained inside this backup.

Multiple statistics sharing the same leading column

Nov 4th

SQL Server will not prevent you from creating identical indexes or statistics. You may think that everything is under your control, but have you ever added a missing index that has been reported by the query optimizer, or the DTA? If so, you will certainly have created duplicate statistics. Have a look at the following example:

|

-- create test table with 3 columns |

Before invoking the following SELECT command, enable the display of the actual execution plan (Ctrl+M or selecting Query/Include Actual Execution Plan from the menu).

|

-- get some data |

If you look at the execution plan, you see that the optimizer complains about a missing index on column c2. The predicted improvement is about 99%, so adding the index is certainly a good idea. Let's do it:

|

-- add missing index |

Perfect! The query runs much faster now and needs a lot fewer resources. But have a look at the table's statistics:

You see three statistics: one for the primary key, a second one for our newly created index ix_1, and a third one that was automatically created during execution plan generation for the first SELECT statement. This is the one whose name starts with _WA_Sys. If the AUTO CREATE STATISTICS option is set to ON, the optimizer will add missing statistics automatically. In our little experiment, the optimizer had to generate this column statistics on column c2 in order to make some assumptions about the number of rows that had to be processed.

And here's the problem: When creating the index on column c2, a statistics on this column is also created, since every index has a corresponding linked statistics. That's just the way it works. At the time the index was added, the column statistics on c2 (the _WA_Sys statistics) already existed. If you don't remove it manually, this statistics will remain there forever, although it is no longer needed. All it's good for is to increase maintenance effort during statistics updates. You can safely remove this statistics by executing:

|

drop statistics t1._WA_Sys_... |

If you didn't think about this before, there's a chance that you'll find some of those superfluous statistics duplicates inside your database(s). Here's a query that finds index-related and column-statistics that match on the first column. Looking for matches on the first column is sufficient here, since the optimizer only automatically adds missing single-column statistics.

|

with all_stats as |
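A query of this kind can be sketched as follows. This is a minimal version of my own and not a reproduction of the query above. It relies on the documented fact that an index-related statistics carries the same id as its index (stats_id = index_id) and lists every auto-created statistics whose column is also the leading column of an index on the same table.

```sql
-- Sketch: auto-created column statistics that are shadowed by an
-- index-related statistics with the same leading column.
select object_name(a.object_id) as table_name,
       c.name                   as column_name,
       a.name                   as auto_created_stats,
       i.name                   as index_name
  from sys.stats as a
  join sys.stats_columns as ac
    on ac.object_id       = a.object_id
   and ac.stats_id        = a.stats_id
   and ac.stats_column_id = 1           -- leading statistics column
  join sys.indexes as i
    on i.object_id        = a.object_id
   and i.index_id         > 0           -- skip the heap entry
   and i.is_hypothetical  = 0           -- skip leftovers from the DTA
  join sys.stats_columns as ic
    on ic.object_id       = i.object_id
   and ic.stats_id        = i.index_id  -- index statistics share the index id
   and ic.stats_column_id = 1
   and ic.column_id       = ac.column_id
  join sys.columns as c
    on c.object_id        = ac.object_id
   and c.column_id        = ac.column_id
 where a.auto_created = 1
   and objectproperty(a.object_id, 'IsUserTable') = 1
 order by table_name, column_name;
```

Each row returned is a candidate for DROP STATISTICS, using the table name and the name in auto_created_stats.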

With that query at hand, you may easily find redundant statistics. Here's a sample output:

If you'd like to find out more about SQL Server statistics, you may want to check out my series of two articles, published recently on the Simple-Talk platform.

Part 1: Queries, Damned Queries and Statistics

Part 2: SQL Server Statistics: Problems and Solutions

It's free, so you might want to give it a try. Feel free to vote, if you like it!

Did you know: Aggregate functions on floats may be non-deterministic

Nov 2nd

One day some of the report users mentioned that, every time they ran a report, they got different results. My first idea was that there were some ongoing data changes, probably from a different connection/user, so this would explain it. But it turned out that no modifications were made. Even setting the database to read only did not help. Numbers in reports differed by about 20% with every execution.

Delving into it, I could isolate the problem. It was a single SELECT statement that, when invoked, returned different results. The numbers differed by up to 20% in value without any data changes being performed!

Have a look at the following sample. We create a test table to demonstrate what I'm talking about:

|

use tempdb |

The table has three columns, where the third column only serves the purpose of filling up the row, so the table contains more data pages.

Let's now insert 600000 rows into our table:

|

declare @x float |

The first INSERT statement adds 300000 rows with positive values for the two columns floatVal and decimalVal. After that, we insert another 300000 rows, this time with inverse signs. So in total, values for each of the two columns should add up to zero. Let's check this by invoking the summation over all rows a few times:

|

select sum(floatVal) as SumFloatVal |

And here's the result:

As for the DECIMAL column, the outcome is as expected. But look at the totals for the FLOAT column. It's perfectly understandable that the sum will reveal some rounding errors. What really puzzled me is the difference between the numbers. Why isn't the rounding error the same for all executions?

I was pretty sure that I had discovered a bug in SQL Server and posted a corresponding item on MSFT's Connect platform (see here).

Unfortunately nobody cared about my problem, and so I took the opportunity of talking to some fellows of the SQL Server CAT team on the occasion of the 2009 PASS Summit. After a while, I received an explanation which I'd like to repeat here.

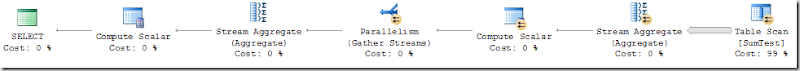

The query is executed in parallel, as the plan reveals:

When summing up values, usually the summation sequence doesn't matter. (If you remember some mathematics from school, that's what the commutative and associative laws of addition are about.) Therefore, reading values in multiple threads and adding them up in any arbitrary order is perfectly fine, as the order doesn't have any influence on the result. Well, at least theoretically. When adding float values, floating point arithmetic introduces a rounding error with every addition. These accumulated rounding errors are the reason for the non-zero values of the float totals in our example. So that's ok, but why different results with almost every execution? The reason for this is parallel execution. Accumulated rounding errors depend on the sequence of the additions, because floating point addition is not associative: (a + b) + c may differ from a + (b + c). There's a chance that the sequence of rows changes with every execution if the query is executed in parallel. And that's why the results change, dependent only on some butterfly wing movements at the other side of the world.
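The dependence on the order of additions can be shown with just three values. The following is a small sketch with values I picked for illustration (they are not taken from the test table): 1 is far below the precision of a float holding 1e20, so it gets lost whenever it is added to a number of that magnitude.

```sql
-- Sketch: the same three float values, added in two different orders
declare @a float, @b float, @c float;
set @a = 1e20;
set @b = -1e20;
set @c = 1;

select (@a + @b) + @c as sum_order_1,   -- (0) + 1            = 1
       @a + (@b + @c) as sum_order_2;   -- 1e20 + (-1e20)     = 0
```

In the second expression, -1e20 + 1 is rounded back to -1e20, so the 1 vanishes before the large values cancel each other out.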

If we add the MAXDOP 1 query hint, only one thread is utilized and the results are the same for every execution, although rounding errors still remain present. So this query:

|

select sum(floatVal) as SumFloatVal |

will be executed by using the following (single thread) execution plan:

This time the result (and also the rounding error) is always the same.
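As a self-contained illustration of the hint syntax, here is a sketch against a throwaway temporary table. The table #floatTest and its three rows are made up for this example. With so few rows the query would not run in parallel anyway, so this only shows where the hint goes, not the effect itself.

```sql
-- Sketch: forcing a serial plan for a float aggregation
create table #floatTest (floatVal float not null);

insert into #floatTest (floatVal) values (1e20);
insert into #floatTest (floatVal) values (1);
insert into #floatTest (floatVal) values (-1e20);

-- MAXDOP 1 restricts this statement to a single thread
select sum(floatVal) as SumFloatVal
  from #floatTest
option (maxdop 1);

drop table #floatTest;
```

The hint affects only the statement it is attached to. The instance-wide "max degree of parallelism" setting remains unchanged.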

Pretty soon after delivering the explanation, the bug was closed. Reason: the observed behavior is "by design".

I can understand that the problem originates from the computer or processor architecture and that MSFT therefore has no control over it.

Although.

When using SSAS' write back functionality, SSAS will always create numeric columns of FLOAT data types. There's no chance of manipulating the data type; it's always float!

Additionally, SSAS more often than not inserts rows into write back tables with extremely large or extremely small values. When looking at these rows, it appeared that they are created solely with the intention of summing up to zero. We discovered plenty of these rows containing inverse values that should cancel each other out in total, but apparently don't. By the way, that's why closing the bug with the "By Design" explanation makes me somewhat sad.

So, probably avoiding FLOATs is a good idea! Unfortunately, this is simply not possible in all cases and sometimes out of our control.

No automatically created or updated statistics for read-only databases

Nov 1st

Ok, that's not really the latest news and it's also well documented. But keep in mind that database snapshots also fall into the category of read-only databases, so you won't see automatically maintained statistics for these either.

I've seen the advice of taking a snapshot of a mirrored database for reporting purposes many times. Even Books Online contains a chapter, Database Mirroring and Database Snapshots, where the scenario is explained.

The intention is to decouple your resource intensive reporting queries from the OLTP system which is generally a good idea. But keep in mind that:

- Reporting queries are highly unpredictable, and

- Reporting queries differ from OLTP queries.

So there's more than a slight chance that query performance will suffer from missing statistics in your reporting snapshot, since the underlying OLTP database simply does not contain all statistics (or indexes) that your reporting applications could take advantage of. These missing statistics can't be added in the snapshot, because it's a read-only database. Additionally, you won't experience any automatic updates of stale statistics in the snapshot. And moreover, any statistics added or updated in the source database after the snapshot was taken are not transferred into the snapshot, of course. This can affect your query performance significantly!

Have a look at the following example:

|

use master |

The script above creates a test database with one table and adds some rows to the table before creating a snapshot of this database. As we have never used column c2 in any query (besides the INSERT), there won't be any statistics for column t1.c2.

Now let's query the snapshot and have a look at the actual execution plan. Here's the query:

|

-- Be sure to show the actual execution plan |

Here's the actual execution plan:

It's clearly evident that the optimizer detects a missing statistics, although the options AUTO CREATE STATISTICS and AUTO UPDATE STATISTICS have both been set to ON. The plan reveals a noticeable difference between the actual and estimated number of rows and also a warning regarding the missing statistics.

So, keep that in mind when using snapshots for reporting applications.
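One way of dealing with this is to create or refresh the statistics your reports need in the source database before the snapshot is taken, since the snapshot inherits whatever exists at that moment. The following is a sketch under assumptions: it has to be run in the source database, it assumes the table t1 from the example lives in the dbo schema, and the statistics name st_t1_c2 is made up.

```sql
-- Sketch: run in the SOURCE database, before creating the snapshot.

-- Create the column statistics the reporting queries will need
-- (st_t1_c2 is a hypothetical name)
create statistics st_t1_c2 on dbo.t1 (c2) with fullscan;

-- Refresh all statistics that have seen modifications
exec sp_updatestats;

-- Check what the snapshot is going to inherit
select name,
       auto_created,
       user_created,
       stats_date(object_id, stats_id) as last_updated
  from sys.stats
 where object_id = object_id(N'dbo.t1');
```

Which statistics are worth creating depends on the reporting queries, so this needs to be revisited whenever the reports change.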

How to treat your MDF and LDF files

Oct 29th

Have you ever set a database to read only? If so, you probably did this by using SQL Server Management Studio or by executing the corresponding ALTER DATABASE command.

Here's another method that I had to investigate recently: A colleague of mine with some limited knowledge of SQL Server hadn't discovered the ALTER DATABASE statement so far. But he knew how to detach and attach a database, because this is what he does all the time in order to copy databases from one computer to another. One day, when he wanted to prevent modifications to one of his databases, he decided to protect the MDF and LDF files of this database. Very straightforwardly, he detached the database, set the MDF and LDF files to read only (by using Windows Explorer) and attached the database again afterwards. Voila: SQL Server did not complain at all (I was very surprised about this) and, as he expected, the database was displayed as read only in the Object Explorer of SSMS.

As I said: I was very surprised, since I didn't expect this method would work. Smart SQL Server! But then the trouble began.

Very shortly, after a system reboot, SQL Server started showing the database in question as "suspect". What happened? I don't know, but I was able to reproduce the behavior with SQL Server 2008 on Windows Server 2008 R2 every time I repeated the following steps:

- Create a database

- Detach the database

- Set the MDF- and LDF-file to read only

- Attach the database again

- Restart the computer

So, I think you should be careful with any modifications to MDF and LDF files outside SQL Server. This seems to be true not only for the data itself but also for these files' attributes. You should always treat MDF and LDF files as SQL Server's exclusive property and never touch them!
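For comparison, here is the route via ALTER DATABASE, which leaves the file attributes alone. It's a short sketch that assumes a database named db2, like the one in the error messages below. The ROLLBACK IMMEDIATE clause is optional and disconnects other sessions, so use it with care.

```sql
-- Sketch: switching a database to read only from inside SQL Server
alter database db2 set read_only with rollback immediate;

-- Verify the current state
select name, is_read_only
  from sys.databases
 where name = N'db2';

-- And back to read write
alter database db2 set read_write with rollback immediate;
```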

Just another point to add here: If you try setting a database with read only MDF- or LDF files to read write again by executing ALTER DATABASE, you'll get an error like this:

Msg 5120, Level 16, State 101, Line 1

Unable to open the physical file "C:\SqlData\User\db2.mdf". Operating system error 5: "5(Access is denied.)".

Msg 5120, Level 16, State 101, Line 1

Unable to open the physical file "C:\SqlData\User\db2_log.LDF". Operating system error 5: "5(Access is denied.)".

Msg 945, Level 14, State 2, Line 1

Database 'db2' cannot be opened due to inaccessible files or insufficient memory or disk space. See the SQL Server errorlog for details.

Msg 5069, Level 16, State 1, Line 1

ALTER DATABASE statement failed.

But that's as expected, I'd say.